准备阶段

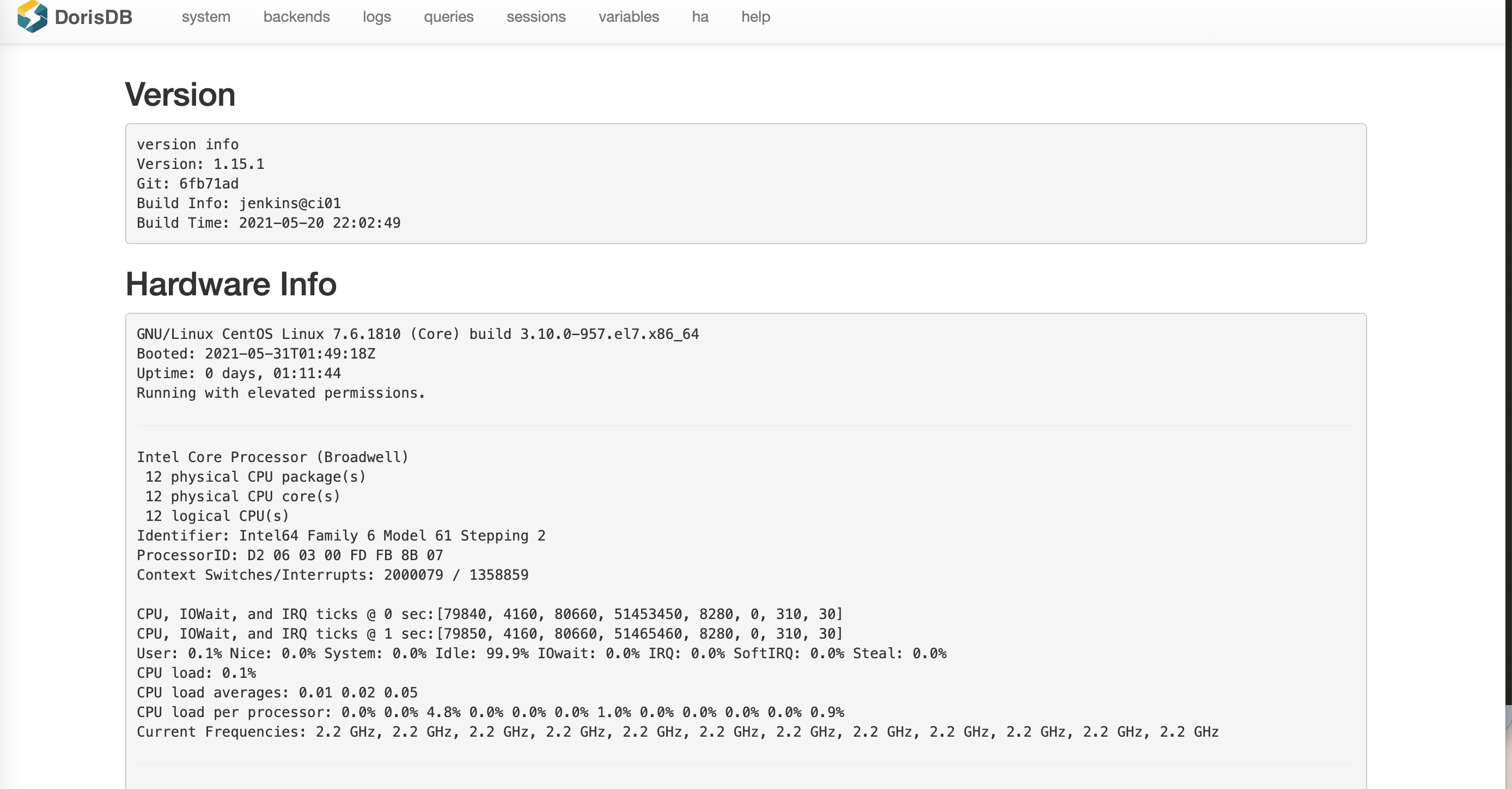

机器配置

| Cluster | CPU Cores | Mem | Disk |

|---|---|---|---|

| master | 8C Intel Core Processor (Haswell, IBRS) | 16G | 488G |

数据来源:

1 | http://goods.elasticsearch.dev.kisgame.net:9200/p_asset_record |

clickhouse的建表语句:

1 | CREATE TABLE default.asset_record |

1 | clickhouse-client --queries-file p_asset_record.sql |

1 | clickhouse-client -d mysql_database_31 --query "select id,member_id,card_no,order_code,shop_id,ref_member_id,create_time,flow_type,merchant_id,merchant_name,shop_code,shop_name,member_name,id_number,mobile_number,member_level,member_level_name,member_type,biz_code,amount,amount2,asset_id,asset_type,change_flag,param1,param2,param3,parent_asset_id,creator_id,creator_name,create_pos_id,create_pos_name,cashier_type,machine_id,machine_category_id,machine_category_name,machine_name,machine_instance_id,machine_instance_name,machine_instance_slot_id,device_id,device_name,record_status,record_seq,remark,machine_instance_slot_no,source_type,ref_asset_id,ref_asset_type,business_model,item_drop_type from asset_record where create_time >= '2021-05-01' and create_time < '2021-05-02'" > asset_record_2021-05-01.csv |

注意:

分区键、排序键不要设置为Nullable

分区的个数不要超过10000个,避免查询时,clickhouse加载过多的文件,导致性能降低

单个insert block中数据分区,不要超过一百个,否则会出现错误

1

too many partitions for single insert block (more than 100). the limit is controlled by 'max_partitions_per_insert_block' setting.

可以去/etc/clickhouse-server/user.xml的profiles->default中设置max_partitions_per_insert_block的合适的值,不用重启,立即生效

也可以max_bytes_to_merge_at_min_space_in_pool来控制merge的数据量及调整merge的线程数

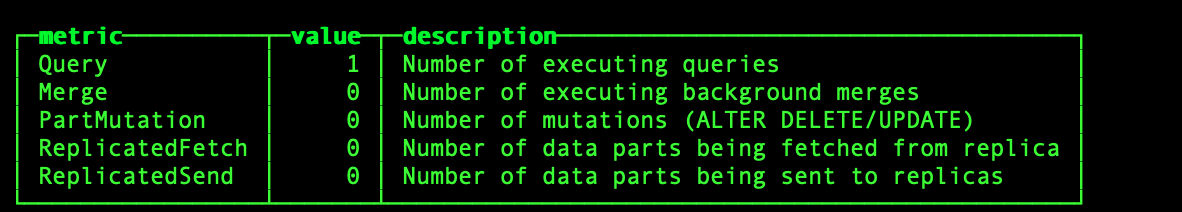

测试

数据准备

从tidb的数据库asset_record表中导出数据

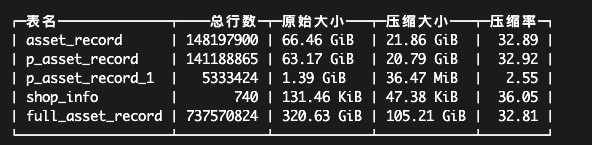

1 | SELECT table AS `表名`,sum( rows ) AS `总行数`,formatReadableSize ( sum( data_uncompressed_bytes ) ) AS `原始大小`,formatReadableSize ( sum( data_compressed_bytes ) ) AS `压缩后大小`,round( ( sum( data_compressed_bytes ) / sum( data_uncompressed_bytes ) ) * 100, 2 ) AS `压缩率` FROM system.parts WHERE database = 'default' GROUP BY table |

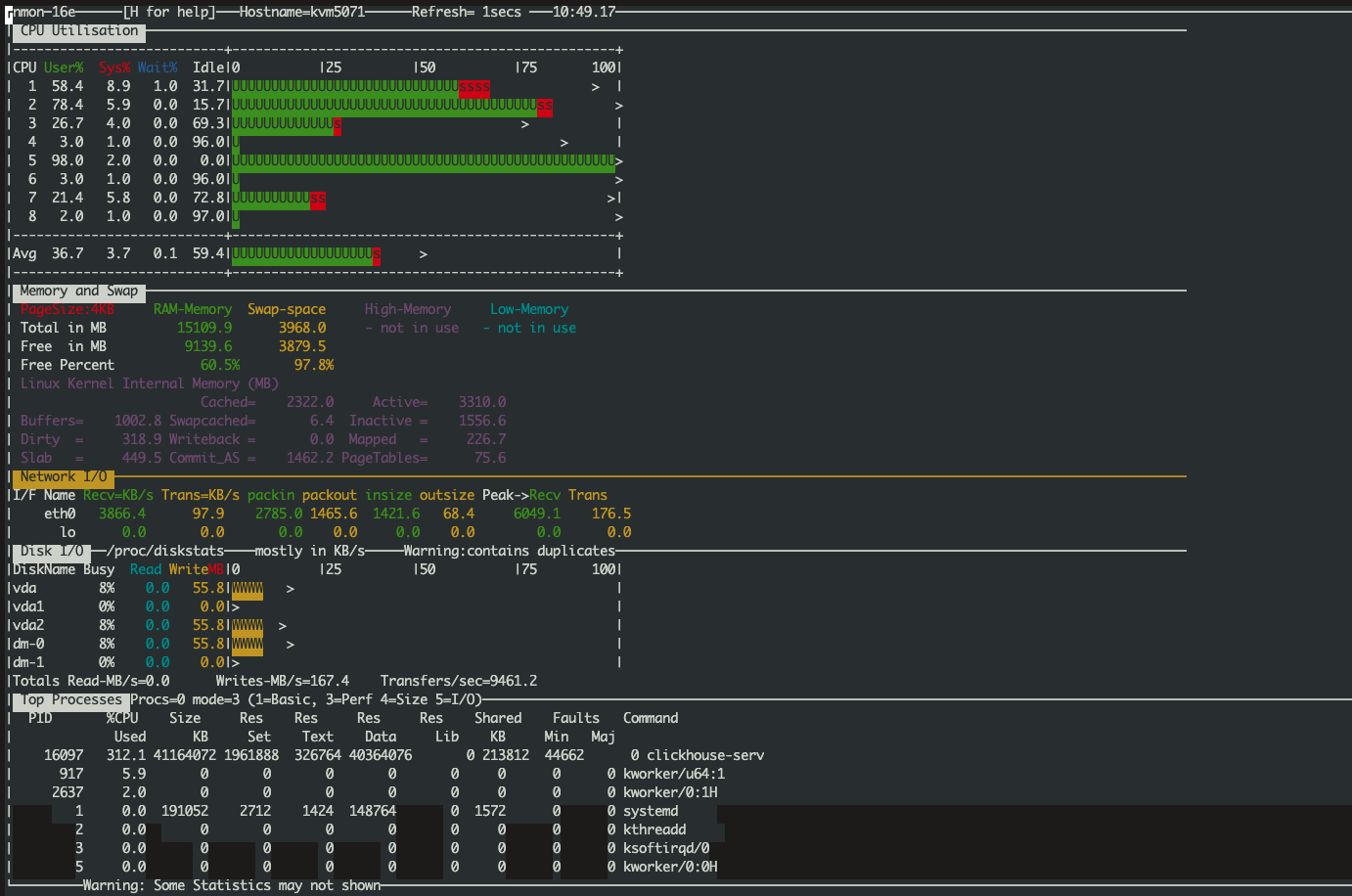

数据写入

insert query (用的场景很少)

库内拷贝速度 insert query 方式:

1 | INSERT INTO p_asset_record SELECT * FROM asset_record WHERE create_time < '2020-09-01' |

Progress: 13.33 million rows, 10.37 GB (312.73 thousand rows/s., 243.37 MB/s.

141188865行数据写入耗时,498.468 sec,约等于283245.595行/s(中间有merge的等待时间)

FLink的jdbc-connector

批次插入:50000rows/1s

295923920/(2422944323/1000)

通过表引擎去拉取数据

内存会被打满

查询场景测试

关联一个维度表:

维度表:shop_info(740)

1 | select shop_id,count(1) from asset_record a left join shop_info s on a.shop_id = s.shop_id group by shop_id; |

事实表:full_asset_record (737570824)

查询sql

1 | ## 查询店铺当天的流水记录(分页第一页,size:20) |

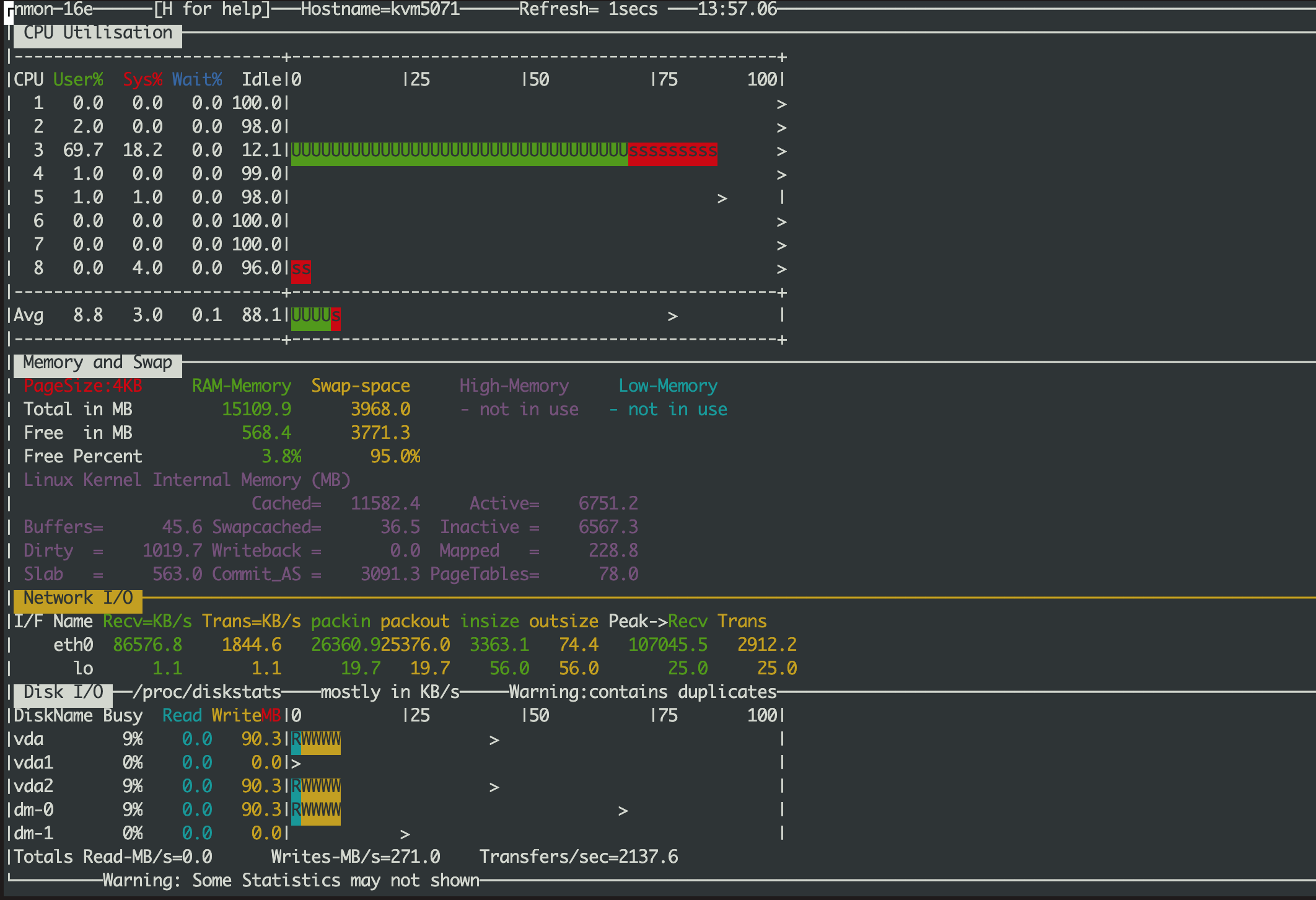

100QPS

1 | ClickHouse exception, code: 241, host: 10.200.50.71, port: 8123; Code: 241, e.displayText() = DB::Exception: Memory limit (total) exceeded: would use 13.28 GiB (attempt to allocate chunk of 4273219 bytes), maximum: 13.28 GiB: (while reading column biz_code): (while reading from part /var/lib/clickhouse/store/307/3075421c-dae3-4f15-bcea-63fde922ba82/202102_3285_3446_3/ from mark 1172 with max_rows_to_read = 8192): While executing MergeTreeReverse (version 21.4.6.55 (official build)) |